Distracted driving is one of the leading causes of car accidents. In the United States, more than 9 people are killed and more than 1,153 people are injured each day in crashes that are reported to involve a distracted driver. Distractions can be visual (taking your eyes off the road), manual (taking your hands off the wheel), or cognitive (taking your mind off the road, for example because of cell-phone use, alcohol, or drugs). Among these, cell-phone use and driving under the influence are two of the leading causes of accident fatalities.

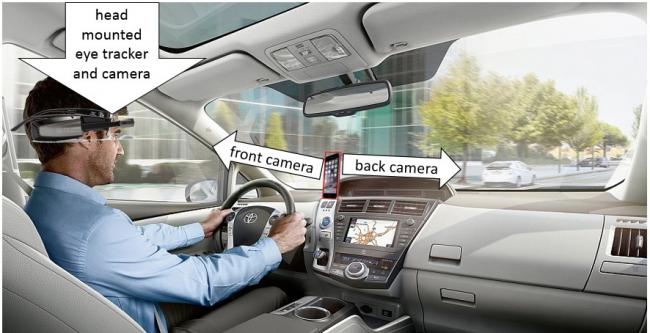

To tackle these problems, we propose a system that combines an internal camera facing the driver, used to estimate the driver's gaze direction (i.e., the direction in which the driver is looking), with a set of cameras observing the environment (both inside and outside the vehicle). Using the gaze direction and the video of the environment, we can estimate which scene elements and objects the driver is currently looking at. By comparing this information with where the driver should be looking, we can estimate the driver's performance. This will allow us to build early warning systems that adapt to the response time of the driver.
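As a rough illustration of how a gaze direction and detected scene objects might be combined, the sketch below picks the object whose direction from the driver's eye lies closest to the gaze ray. It is not the project's method: the function names, the 5-degree tolerance, the shared 3D coordinate frame, and the example object positions are all assumptions made for this example.

```python
import math


def _normalize(v):
    """Return v scaled to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / norm for c in v)


def angle_between_deg(a, b):
    """Angle in degrees between two 3D vectors."""
    a, b = _normalize(a), _normalize(b)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    return math.degrees(math.acos(dot))


def attended_object(eye_pos, gaze_dir, objects, max_angle_deg=5.0):
    """Return the label of the object closest to the gaze ray, or None.

    objects maps a label to a 3D center, expressed in the same
    (hypothetical) vehicle coordinate frame as eye_pos and gaze_dir.
    """
    best_label, best_angle = None, max_angle_deg
    for label, center in objects.items():
        to_object = tuple(c - e for c, e in zip(center, eye_pos))
        angle = angle_between_deg(gaze_dir, to_object)
        if angle <= best_angle:
            best_label, best_angle = label, angle
    return best_label


# Hypothetical scene: positions in meters, z pointing forward from the driver.
objects = {
    "pedestrian": (2.0, 0.0, 10.0),
    "lead_vehicle": (0.0, 0.0, 20.0),
    "phone": (0.3, -0.4, 0.5),
}
eye = (0.0, 0.0, 0.0)
looking_ahead = attended_object(eye, (0.0, 0.0, 1.0), objects)
looking_down = attended_object(eye, (0.3, -0.4, 0.5), objects)
```

A warning system could then compare the attended object with the object the driver is expected to monitor. In this toy scene, a driver looking straight ahead attends to the lead vehicle but not to the pedestrian, who is about 11 degrees off the gaze ray.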

Our ultimate goal is to use the response time and gaze data to build a system that understands the driver's state of mind. Specifically, we aim to predict whether a driver is tired, under the influence, experiencing road rage, or simply performing other actions that diminish their attention. This matters because the action the driving assistance system should take can vary significantly with the driver's state of mind.

This is a continuation of the project "Predicting a Driver's State-of-Mind" by the same PIs.

Publications:

- P. Kellnhofer, A. Recasens, S. Stent, W. Matusik, and A. Torralba, “Gaze360: Physically Unconstrained Gaze Estimation in the Wild,” in ICCV 2019, doi: 10.1109/ICCV.2019.00701 [Online]. Available: https://doi.org/10.1109/ICCV.2019.00701

- A. Recasens, P. Kellnhofer, S. Stent, W. Matusik, and A. Torralba, “Learning to Zoom: A Saliency-Based Sampling Layer for Neural Networks,” in Computer Vision – ECCV 2018, Cham, 2018, pp. 52–67 [Online]. Available: https://doi.org/10.1007/978-3-030-01240-3_4