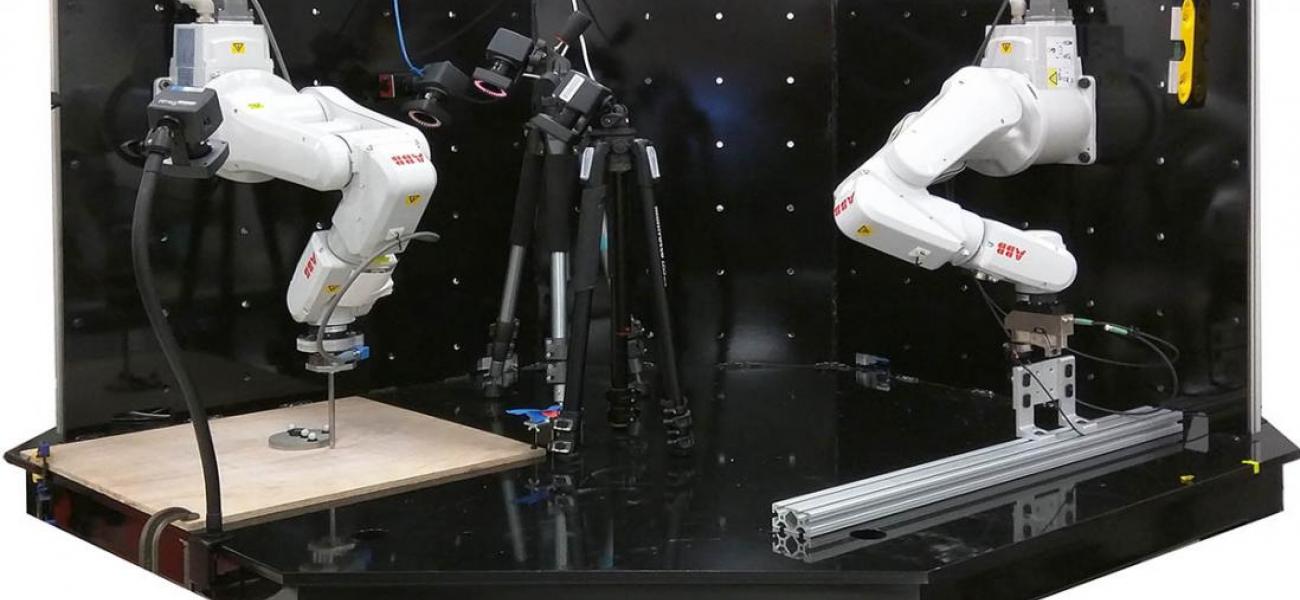

The Toyota-CSAIL Joint Research Center aims to advance autonomous vehicle technologies, with the goal of reducing traffic casualties and potentially even developing a vehicle incapable of getting into an accident.

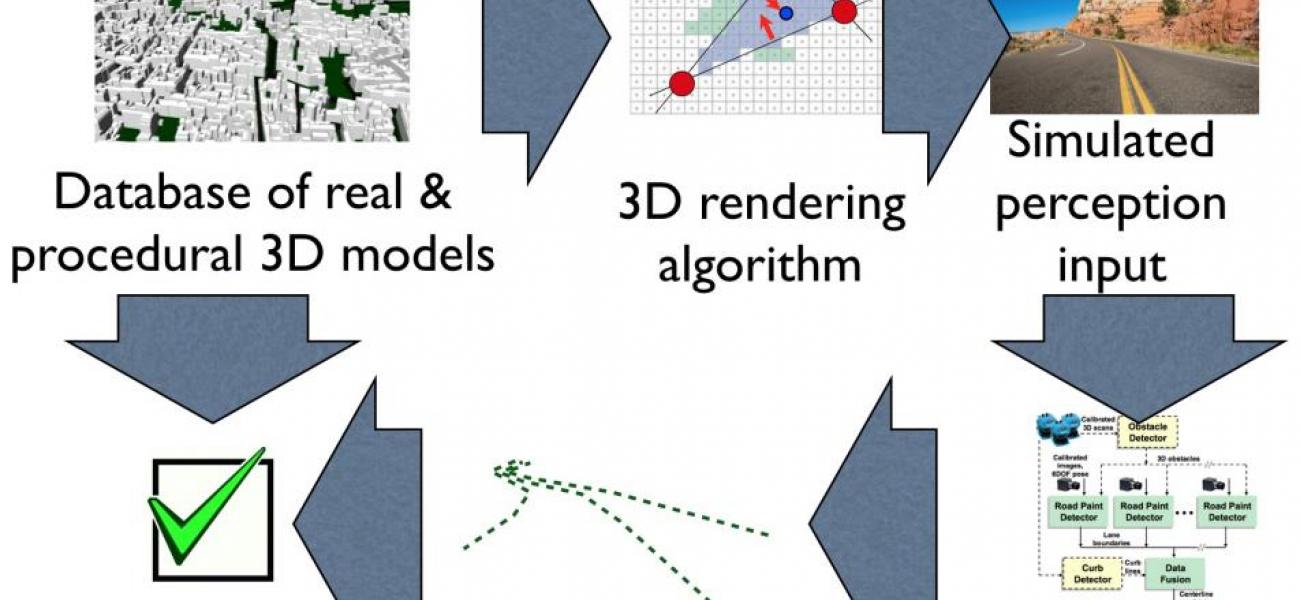

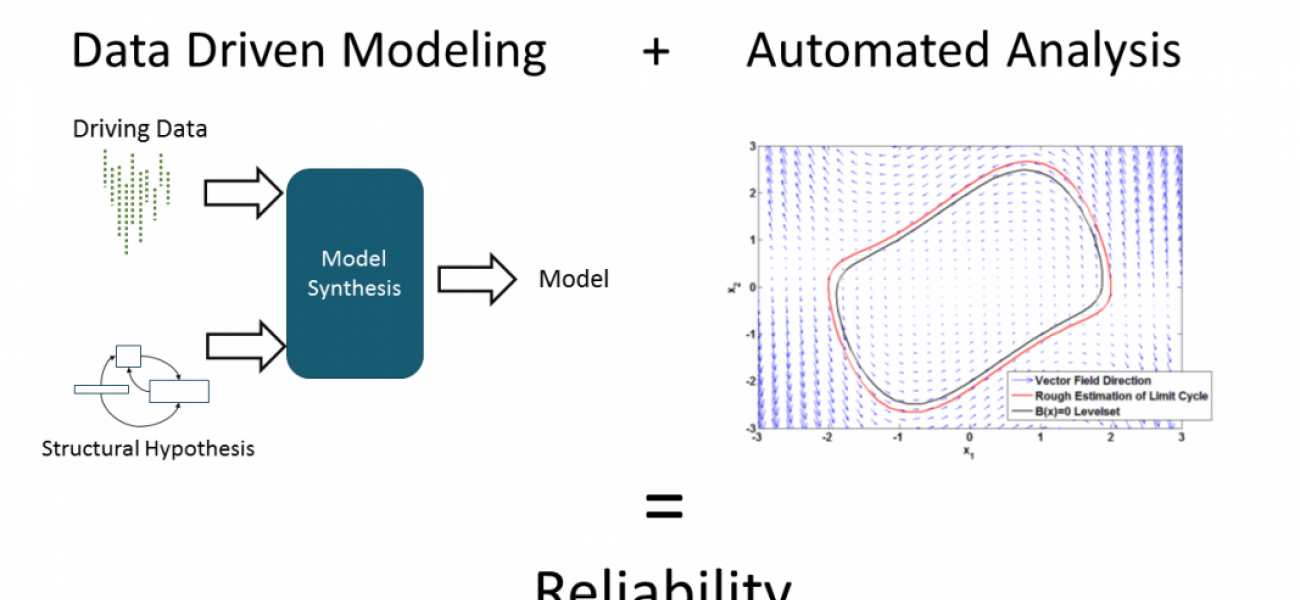

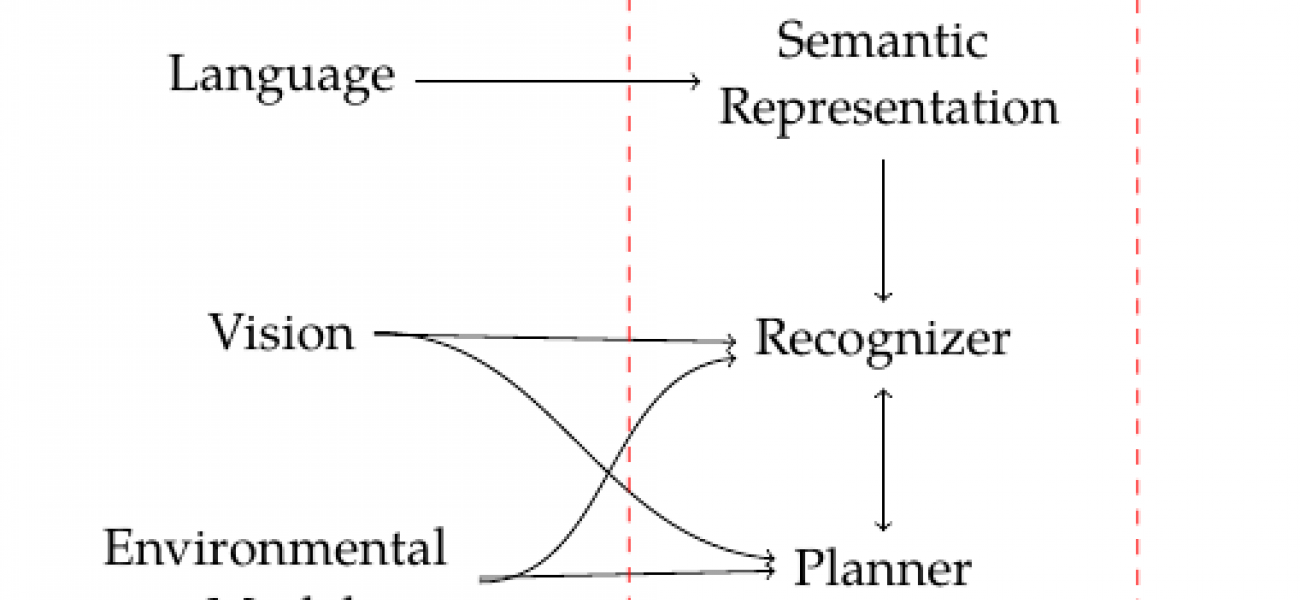

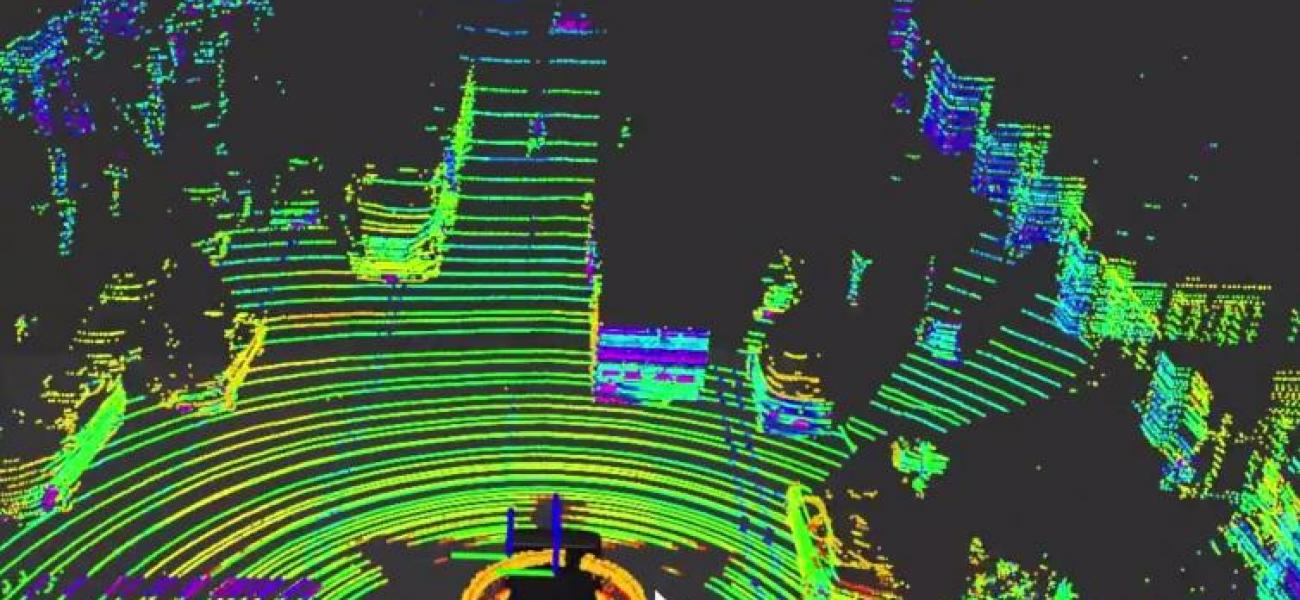

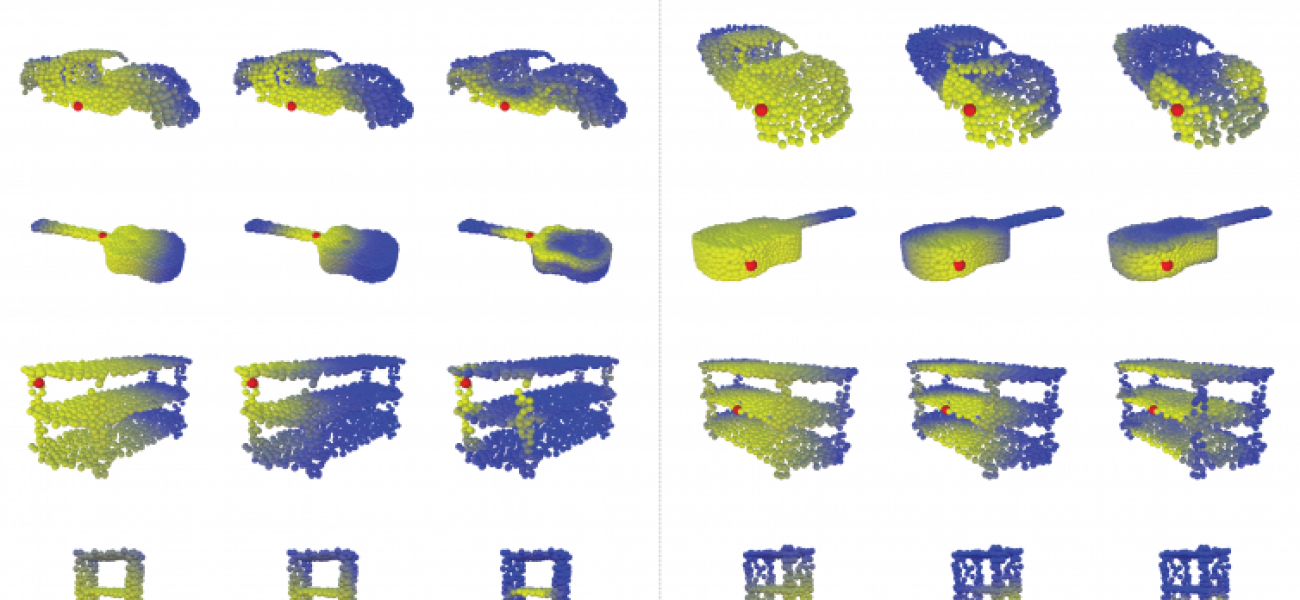

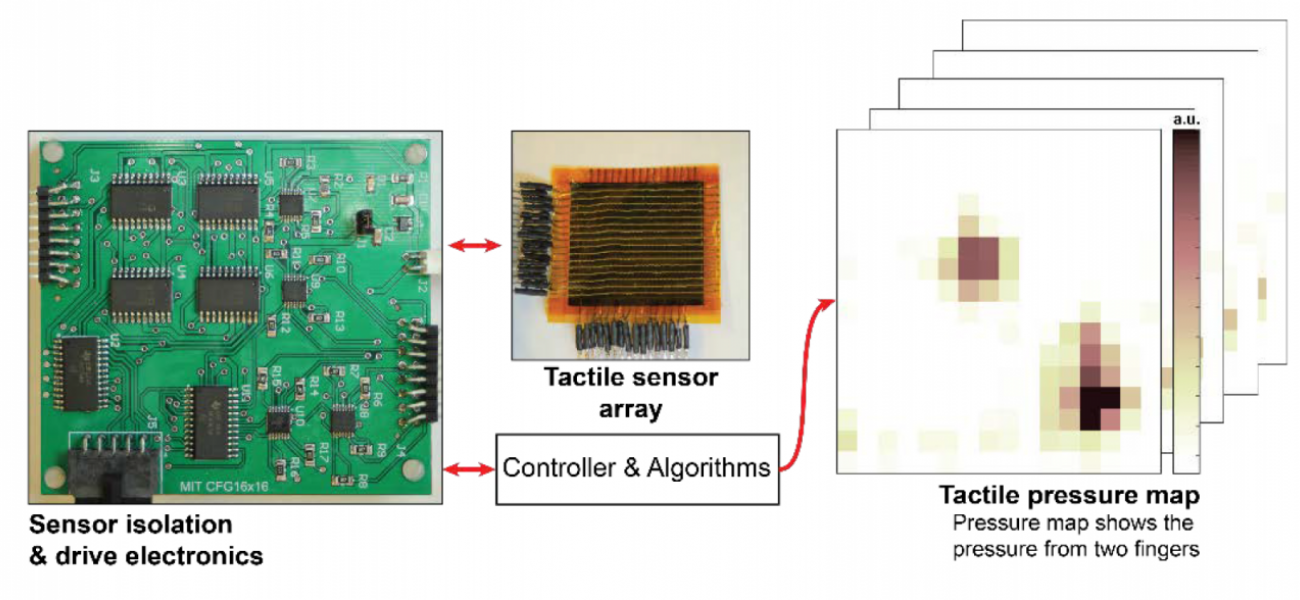

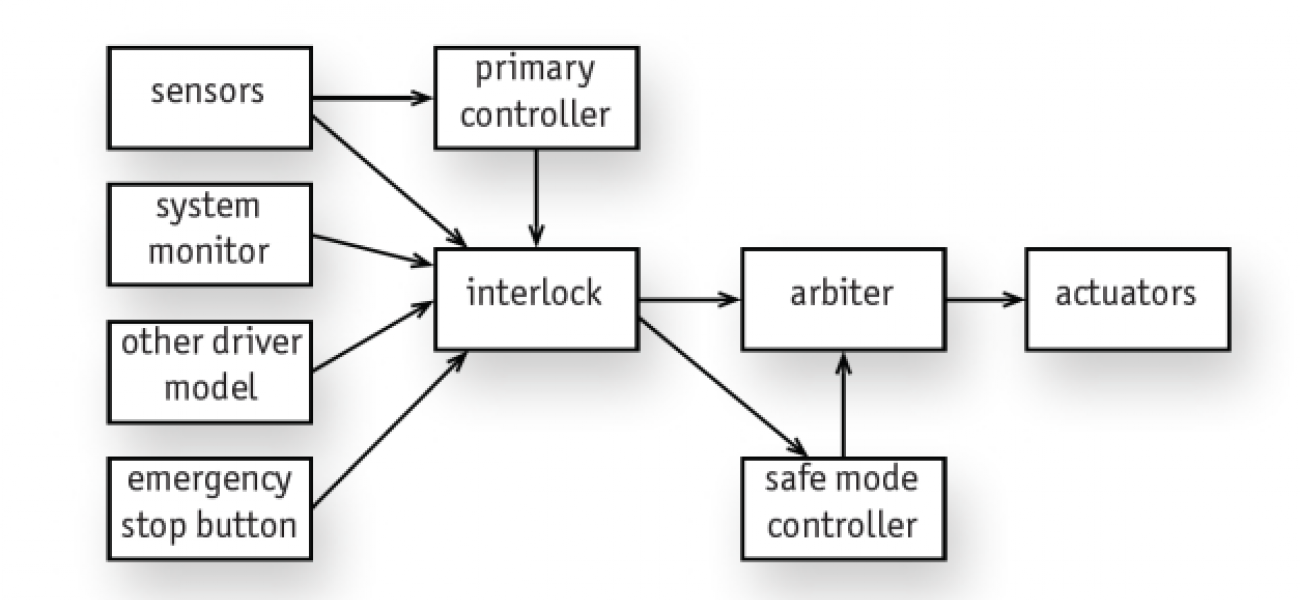

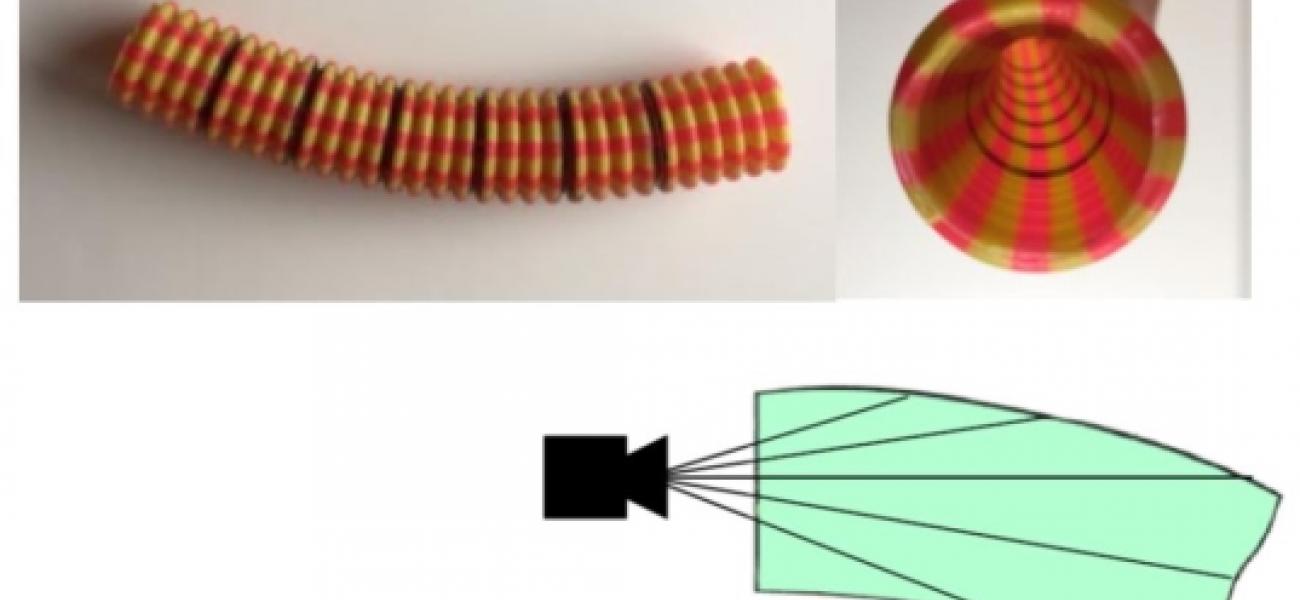

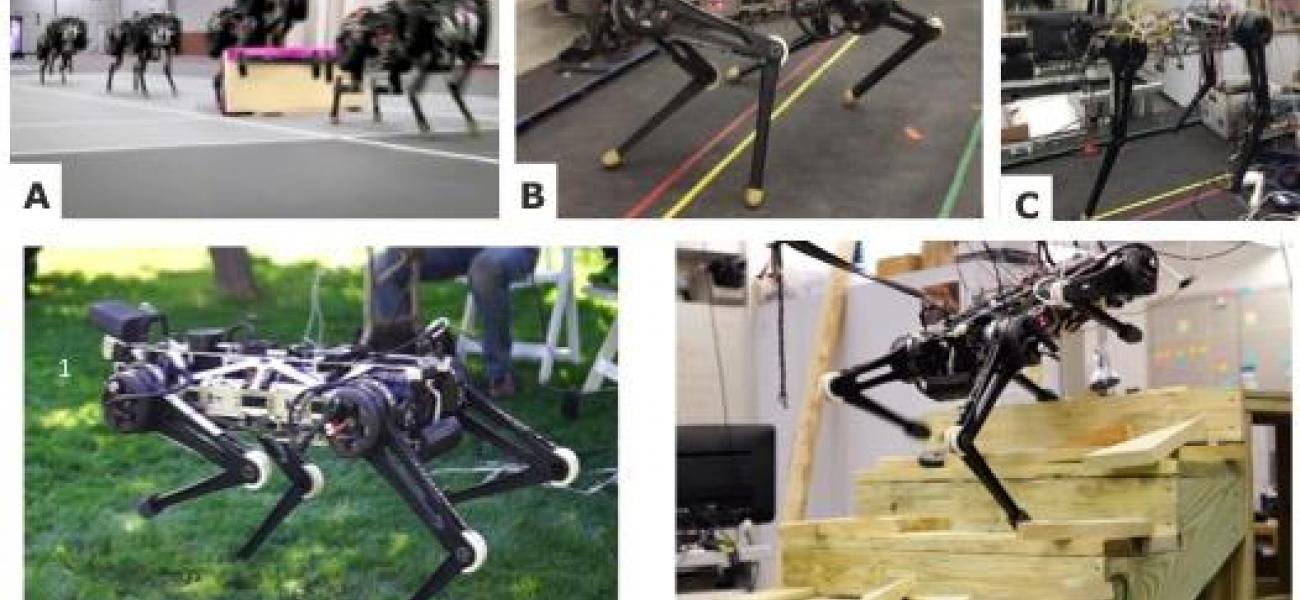

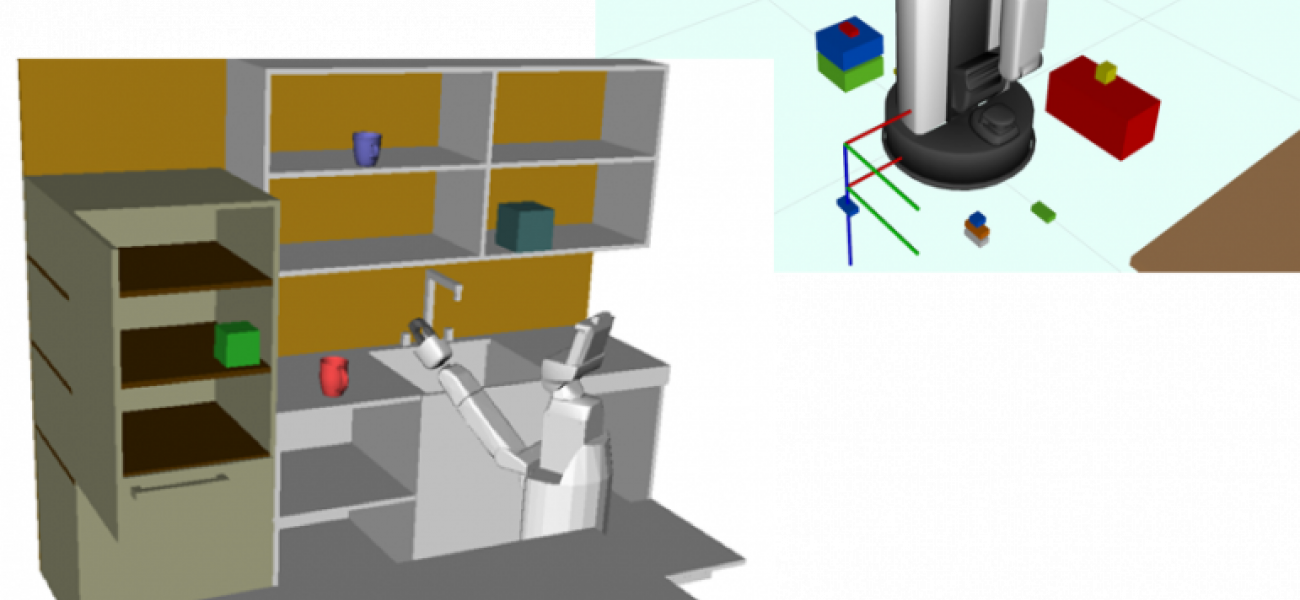

Led by CSAIL director Daniela Rus, the new center will focus on developing advanced decision-making algorithms and systems that allow vehicles to perceive and navigate their surroundings safely, without human input. Researchers will tackle challenges ranging from computer vision and perception to planning and control.